#INTERFACE DYNAMICS


Text

USA 1993

28 notes


Text

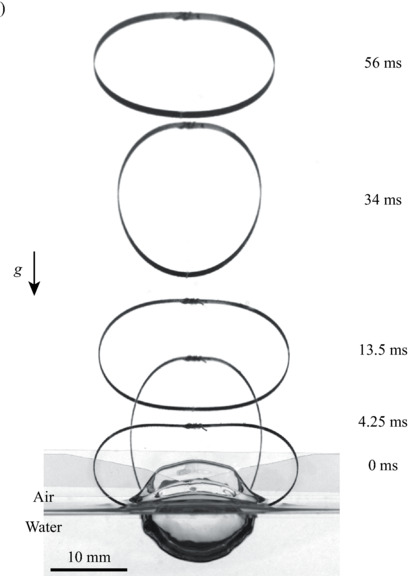

Water Jumping Hoops

Small creatures like springtails and spiders can jump off the air-water interface using surface tension. But larger creatures can water-jump, too, using drag. Here, researchers study drag-based water jumping with a simple elastic hoop. (Image and research credit: H. Jeong et al.)

77 notes


Text

*vibrating* new Figmin upd8 in 1 month that has me actually excited to create AR art again

#auropost#we’re getting ai scripting finally#no longer will i have to wrestle with the physics menu#numbers BEGONE#i’ll be able to make a ballon and say ‘hey make this balloon have balloon physics’#and it’ll just happen automatically#will be able to create characters that can be user-controlled#will be able to trigger animations and scene changes dynamically#will be able to create interfaces that users can have conversations with#i’m like. SO full of bees about this#i’ve been waiting so long for it jdhdhsjsjjs#the best part is this will make working in AR so much easier on my body#ai positivity for artists…… it DOES exist……

13 notes


Text

youtube

B Ball – Events indication, Demo Show 2

[ Work in Progress ]

There are platforms, and you knock them down. They come in different colors.

There are two skills: sharp shooter and destroyer.

Upcoming events are indicated on the screen at all times. Get a skill: indicator on the left. Collect 5 blocks of one color: indicator on the left. Diagonal enemy: indicator on the right. Diagonal territory. Receive a bonus: indicator on the right.

Bonuses work automatically.

For two points of destroyer: double hit. For 2 collected colors with amount 2: triple hit. And for two points of sharp shooter: 5-times hit. This makes the game more dynamic.

There are three types of enemies. A response hit, which happens when you hit a block. Three enemies in a random place. And a diagonal enemy, which flies along a random diagonal path.

And now it already looks more like retro sci-fi.

Basic Pascal version 1.18 "Duckling" is the newest version. In this version there are 4 new games! Puddles at Countryside, Duckling Pseudo 3D, Road to Countryside, Duckling Goes 2D. And even more retro games! It is a pack of retro games made with modern versions of Basic and Pascal.

A new version of the Basic Pascal game pack is now in development. This game will be included in the new version.

Basic Pascal: http://www.dimalink.tv-games.ru/games/basicpascal/index_eng.html

Website: http://www.dimalink.tv-games.ru/home_eng.html

Itchio: https://dimalink.itch.io/basic-pascal

#QBasic#BBC Basic for SDL 2.0#Programming with Basic#Retro Programming#MS DOS#8 Bit Computers#Retro Game#Devlog#Gamedev#Arcade#Arkanoid#SciFi#Science Fiction#Retro Interface#Smash Platforms#Multicolor Platforms#MSX#Indication#Bonus#Dynamics#Fantastic#80s scifi#8 bit computers#space ship#space suit#Youtube

3 notes


Text

Yesterday I watched "Interface" by UMAMI on suggestion of a friend and I really enjoyed it

great character dynamic between the two main characters Henryk and Mischief

Heavily suggest it to any of you who enjoy storytelling through surreal horror

[its on YouTube so if you have 2 hours to waste]

#spideer'sart#myart#tw clown#i love the character dynamic of a talkative horror and a silent companion#mischief is so fun#and the artstyle is so delightfully scuffed#interface umami#mischief umami#mischief interface#henryk niebieski#henryk interface

14 notes


Text

The Way the Brain Learns is Different from the Way that Artificial Intelligence Systems Learn - Technology Org

New Post has been published on https://thedigitalinsider.com/the-way-the-brain-learns-is-different-from-the-way-that-artificial-intelligence-systems-learn-technology-org/


Researchers from the MRC Brain Network Dynamics Unit and Oxford University’s Department of Computer Science have set out a new principle to explain how the brain adjusts connections between neurons during learning.

This new insight may guide further research on learning in brain networks and may inspire faster and more robust learning algorithms in artificial intelligence.

Study shows that the way the brain learns is different from the way that artificial intelligence systems learn. Image credit: Pixabay

The essence of learning is to pinpoint which components in the information-processing pipeline are responsible for an error in output. In artificial intelligence, this is achieved by backpropagation: adjusting a model’s parameters to reduce the error in the output. Many researchers believe that the brain employs a similar learning principle.

However, the biological brain is superior to current machine learning systems. For example, we can learn new information by just seeing it once, while artificial systems need to be trained hundreds of times with the same pieces of information to learn them.

Furthermore, we can learn new information while maintaining the knowledge we already have, while learning new information in artificial neural networks often interferes with existing knowledge and degrades it rapidly.

These observations motivated the researchers to identify the fundamental principle employed by the brain during learning. They looked at some existing sets of mathematical equations describing changes in the behaviour of neurons and in the synaptic connections between them.

They analysed and simulated these information-processing models and found that they employ a fundamentally different learning principle from that used by artificial neural networks.

In artificial neural networks, an external algorithm tries to modify synaptic connections in order to reduce error, whereas the researchers propose that the human brain first settles the activity of neurons into an optimal balanced configuration before adjusting synaptic connections.
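The "settle first, then adjust" order described above can be sketched with a toy energy-based model. This is only an illustration under stated assumptions, not the authors' implementation: it uses a three-neuron scalar chain in the style of a predictive coding network, and the names `energy`, `relax_hidden`, and `learn_step`, along with all the numeric values, are hypothetical.

```python
def energy(x0, x1, x2, w1, w2):
    """Sum of squared prediction errors along the chain x0 -> x1 -> x2."""
    return 0.5 * (x1 - w1 * x0) ** 2 + 0.5 * (x2 - w2 * x1) ** 2


def relax_hidden(x0, target, w1, w2, steps=200, rate=0.1):
    """Settle the hidden activity x1 with input and output clamped.

    Gradient descent on the energy with respect to x1 only; the weights
    are left untouched during this phase.
    """
    x1 = w1 * x0  # start from the ordinary feedforward prediction
    for _ in range(steps):
        grad = (x1 - w1 * x0) - w2 * (target - w2 * x1)
        x1 -= rate * grad
    return x1


def learn_step(x0, target, w1, w2, lr=0.1):
    """One learning step: settle the activity first, then adjust the weights."""
    x1 = relax_hidden(x0, target, w1, w2)
    # Each weight moves to reduce its own local prediction error,
    # evaluated at the settled activity rather than the feedforward one.
    w1 += lr * (x1 - w1 * x0) * x0
    w2 += lr * (target - w2 * x1) * x1
    return w1, w2


if __name__ == "__main__":
    w1, w2 = learn_step(x0=1.0, target=1.0, w1=0.5, w2=0.5)
    print("updated weights:", w1, w2)
    print("feedforward output after the step:", w1 * w2 * 1.0)
```

In backpropagation the weight changes would be computed directly from the output error. Here the hidden activity first moves toward a value consistent with both the input and the target, and the weights then follow that settled activity.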

The researchers posit that this is in fact an efficient feature of the way that human brains learn. This is because it reduces interference by preserving existing knowledge, which in turn speeds up learning.

Writing in Nature Neuroscience, the researchers describe this new learning principle, which they have termed ‘prospective configuration’. They demonstrated in computer simulations that models employing this prospective configuration can learn faster and more effectively than artificial neural networks in tasks that are typically faced by animals and humans in nature.

The authors use the real-life example of a bear fishing for salmon. The bear can see the river and it has learnt that if it can also hear the river and smell the salmon it is likely to catch one. But one day, the bear arrives at the river with a damaged ear, so it can’t hear it.

In an artificial neural network information processing model, this lack of hearing would also result in a lack of smell (because while learning there is no sound, backpropagation would change multiple connections including those between neurons encoding the river and the salmon) and the bear would conclude that there is no salmon, and go hungry.

But in the animal brain, the lack of sound does not interfere with the knowledge that there is still the smell of the salmon, therefore the salmon is still likely to be there for catching.

The researchers developed a mathematical theory showing that letting neurons settle into a prospective configuration reduces interference between information during learning. They demonstrated that prospective configuration explains neural activity and behaviour in multiple learning experiments better than artificial neural networks.

Lead researcher Professor Rafal Bogacz of MRC Brain Network Dynamics Unit and Oxford’s Nuffield Department of Clinical Neurosciences says: ‘There is currently a big gap between abstract models performing prospective configuration, and our detailed knowledge of anatomy of brain networks. Future research by our group aims to bridge the gap between abstract models and real brains, and understand how the algorithm of prospective configuration is implemented in anatomically identified cortical networks.’

The first author of the study Dr Yuhang Song adds: ‘In the case of machine learning, the simulation of prospective configuration on existing computers is slow, because they operate in fundamentally different ways from the biological brain. A new type of computer or dedicated brain-inspired hardware needs to be developed, that will be able to implement prospective configuration rapidly and with little energy use.’

Source: University of Oxford


#A.I. & Neural Networks news#algorithm#Algorithms#Anatomy#Animals#artificial#Artificial Intelligence#artificial intelligence (AI)#artificial neural networks#Brain#Brain Connectivity#brain networks#Brain-computer interfaces#brains#bridge#change#computer#Computer Science#computers#dynamics#ear#employed#energy#fishing#Fundamental#Future#gap#Hardware#hearing#how

2 notes


Text

i can't find the post now so maybe i've made it up entirely, who's to say, but i feel like i remember seeing a post about saccharina saying that she expects too much for the rest of the Rocks to treat her like family, but now that i'm actually watching the season, dude, she says multiple times that she doesn't care if they treat her like Family, she really just wants them to be decent to her and they refuse to even grant her that much

#N posts stuff#like the big confrontation i'm at now is bc Saccharina made a move to Help Ruby in this fight and then when she mentions it verbally#Ruby responds to that by entertaining the notion of shoving Saccharina off a cliff bc it pisses her off so much to acknowledge that#<- that's not Saccharina demanding everyone play 'happy family' that's her helping an ally and getting spit on for it#if Ruby and Amathar don't want to interface with Saccharina as family they shouldn't have to; but that doesn't mean that they get#to shit on HER for representing obligation they don't want; they could Just Be Civil to her instead#and ruby saying 'you can be my sister or my queen but you can't have both' when saccharina has Reiterated that she doesn't even Want#to be a queen is like. again i get where Ruby is coming from this is not a bash on her emotional state so much as it is an attempt#to comment on fan Reactions to the dynamics here - ruby is Putting saccharina in a dichotomy she does not want to be in and is#Projecting a dynamic that does not inherently exist; saccharina wasn't looking for a sister in that moment she was just asking for#an Acknowledgement of her efforts and asking someone else to give her some effort back; the way any teammate would want#and yeah when ruby comes up to her and doesn't apologize just says 'you're asking too much of me' i don't think saccharina saying#'hey i get that but i was truly Excited to meet you' is a demand for sisterhood; it's just 'i was really excited to meet you#and instead of being nice you're Just constantly shitting on me' -> that's a comment on why She's so fucked up about ruby's harshness#not a demand for ruby to start liking her; do you see what i mean? like am I making sense here? lol#i mean i still have a few eps left maybe there's still some conversations i haven't seen yet but. you know

4 notes


Text

youtube

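Meet Liquid Glass, the New User Interface - WWDC25

Liquid Glass unifies the design language across Apple platforms while providing a more dynamic and engaging user experience. This session covers the design principles of Liquid Glass, its core optical and physical properties, and where it is used and why.

It builds on an evolution that runs from the Aqua user interface of Mac OS X, through the real-time blurs of iOS 7, the fluid responsiveness of iPhone X, and the flexibility of the Dynamic Island, to the immersive interface of visionOS.

Going a step beyond simply recreating objects from the physical world, Apple created Liquid Glass, a digital meta-material that dynamically bends and shapes light.

Liquid Glass also moves organically, giving the impression of a lightweight liquid, and responds both to the fluidity of touch and to the dynamism of modern apps.

Liquid Glass complements the evolution of Apple's product design, which has become more rounded and more immersive.

The design feels rounded and floating, and nests neatly into the smooth curves of the latest devices.

Because shapes are clearly defined, they are easy to tap, and because the design reflects the natural geometry of human fingers, touch interaction is easier.

The most defining characteristic of Liquid Glass is lensing. Lensing is a phenomenon we experience every day in the natural world.

Through those experiences, we intuitively understand how the warping and bending of light affects our perception of a transparent object's presence, motion, and shape.

Liquid Glass uses these visual cues, which we grasp instinctively, to separate objects and express layers in a new way while letting the content underneath show through.

Whereas previous materials scattered light, this new set of materials dynamically bends, shapes, and concentrates light in real time.

This defines the object in relation to the background content while giving a visual impression grounded in our experience of the natural world.

This sculpting of light makes controls feel extremely lightweight and transparent while keeping them visually distinguishable.

Instead of fading, Liquid Glass objects materialize and disappear by gradually modulating the bending of light and the lensing.

This allows a gentle transition while preserving the optical integrity of the material.

How the material feels and behaves is just as important as how it looks. At its core, Liquid Glass is designed with appearance and motion treated as one.

We intuitively know what the movement of liquid feels like. Its smooth, responsive, fluid motion informs the design of an interface that matches our innate perception of the physical world.

To achieve this, Liquid Glass responds to interaction by instantly flexing and energizing with light. The result is an interface that feels responsive, satisfying, and alive.

Liquid Glass also has an inherently gel-like flexibility, moving smoothly in step with the user's interaction while conveying its fluid and malleable nature.

This fluidity aligns the feel of the interface with the dynamism and constant change that characterize how users think and move today.

UI elements can also lift up into Liquid Glass, for example when a component is interacted with. At rest the element is calm, and on touch it comes alive.

This is particularly effective for controls, where the value underneath remains clearly visible through the transparent liquid lens. This fluid feel applies not only to how the material responds to input but also to how it responds to the dynamically changing environment of modern apps.

As you move between states in an app, Liquid Glass dynamically morphs between controls according to context. This continually reinforces the concept that the controls sit on a single raised plane.

Transitions between different sections of an app also feel smooth and seamless because the controls continuously morph. When a menu is presented, the bubble springs open to reveal its contents.

With this lightweight inline transition, everything appears right where you tapped. This communicates the relationship between the button and its contents very clearly and directly.

With these properties, Liquid Glass brings a new era for the look of apps, and it is also an innovation in how they feel.

By combining the ability to bend and shape light in new ways with the ability to morph dynamically and create shape, Liquid Glass is designed to transform the experience of using apps into something fundamentally more organic, more immersive, and more fluid.

<Recommended sites>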

Designing Fluid Interfaces - WWDC 2018 - Videos - Apple Developer

0 notes

Text

Coming up with the acronym took longer than fixing the time display bug.

0 notes

Text

SciTech Chronicles, Jan 21, 2025

#underground#bacteria#archaea#methanogenic#Quebec#aggression#antisocial#boys#predicted#glymphatic#clearance#norepinephrine#brain#fluid#dynamics#BrainGate2#Neural#Interface#cursor#finger#independently#damage#coral#monitoring#flattened#axis

1 note


Text

Why Analog Gear for Mastering? Because Digital Can't Hug!

In the ever-evolving world of music production, the debate between analog and digital gear rages on like a two-headed monster with a penchant for sound. While digital technology has revolutionized the way we create and manipulate audio, there’s something undeniably charming about the warmth and character of analog equipment—especially when it comes to mastering. If you’ve ever felt that your mix…

#analog gear#art#compression#connection#digital interfaces#digital mastering#digital platforms#digital PR#digital processing#digital technology#distortion#dynamic range#EQ#evolution#harmonic distortion#harmonics#hope#joy#mastering#meditation#music#music production#reel-to-reel#revolution#reward#sound#time

0 notes

Link

91 notes


Text

Ready to take your content to the next level?

Fliki AI will blow your mind! Imagine transforming a brilliant blog post, witty tweet, or stellar presentation into dynamic videos effortlessly.

Fliki AI is your ultimate content creation sidekick. With its cutting-edge AI-powered platform, you can turn text into engaging videos and voiceovers in no time.

Experience voice cloning that sounds just like you!

With an extensive stock media library and user-friendly interface, Fliki is perfect for marketers, educators, and social media gurus alike. Dive into the world of Fliki AI and let your ideas come to life!

#Fliki AI

#ContentCreation

#fliki AI#content creation#video transformation#digital content#AI tools#voice cloning#stock media library#user-friendly interface#elevate content#marketing tools#social media videos#video editing#engaging videos#dynamic presentations#content marketing#AI technology#creative content#video production#online education#digital marketing#content strategy#video voiceovers#innovative tools#blog to video#presentation videos#social media content#video creation#AI revolution#video magic#content ideas

1 note


Text

DPAD Algorithm Enhances Brain-Computer Interfaces, Promising Advancements in Neurotechnology

New Post has been published on https://thedigitalinsider.com/dpad-algorithm-enhances-brain-computer-interfaces-promising-advancements-in-neurotechnology/


The human brain, with its intricate network of billions of neurons, constantly buzzes with electrical activity. This neural symphony encodes our every thought, action, and sensation. For neuroscientists and engineers working on brain-computer interfaces (BCIs), deciphering this complex neural code has been a formidable challenge. The difficulty lies not just in reading brain signals, but in isolating and interpreting specific patterns amidst the cacophony of neural activity.

In a significant leap forward, researchers at the University of Southern California (USC) have developed a new artificial intelligence algorithm that promises to revolutionize how we decode brain activity. The algorithm, named DPAD (Dissociative Prioritized Analysis of Dynamics), offers a novel approach to separating and analyzing specific neural patterns from the complex mix of brain signals.

Maryam Shanechi, the Sawchuk Chair in Electrical and Computer Engineering and founding director of the USC Center for Neurotechnology, led the team that developed this groundbreaking technology. Their work, recently published in the journal Nature Neuroscience, represents a significant advancement in the field of neural decoding and holds promise for enhancing the capabilities of brain-computer interfaces.

The Complexity of Brain Activity

To appreciate the significance of the DPAD algorithm, it’s crucial to understand the intricate nature of brain activity. At any given moment, our brains are engaged in multiple processes simultaneously. For instance, as you read this article, your brain is not only processing the visual information of the text but also controlling your posture, regulating your breathing, and potentially thinking about your plans for the day.

Each of these activities generates its own pattern of neural firing, creating a complex tapestry of brain activity. These patterns overlap and interact, making it extremely challenging to isolate the neural signals associated with a specific behavior or thought process. In the words of Shanechi, “All these different behaviors, such as arm movements, speech and different internal states such as hunger, are simultaneously encoded in your brain. This simultaneous encoding gives rise to very complex and mixed-up patterns in the brain’s electrical activity.”

This complexity poses significant challenges for brain-computer interfaces. BCIs aim to translate brain signals into commands for external devices, potentially allowing paralyzed individuals to control prosthetic limbs or communication devices through thought alone. However, the ability to accurately interpret these commands depends on isolating the relevant neural signals from the background noise of ongoing brain activity.

Traditional decoding methods have struggled with this task, often failing to distinguish between intentional commands and unrelated brain activity. This limitation has hindered the development of more sophisticated and reliable BCIs, constraining their potential applications in clinical and assistive technologies.

DPAD: A New Approach to Neural Decoding

The DPAD algorithm represents a paradigm shift in how we approach neural decoding. At its core, the algorithm employs a deep neural network with a unique training strategy. As Omid Sani, a research associate in Shanechi’s lab and former Ph.D. student, explains, “A key element in the AI algorithm is to first look for brain patterns that are related to the behavior of interest and learn these patterns with priority during training of a deep neural network.”

This prioritized learning approach allows DPAD to effectively isolate behavior-related patterns from the complex mix of neural activity. Once these primary patterns are identified, the algorithm then learns to account for remaining patterns, ensuring they don’t interfere with or mask the signals of interest.

The flexibility of neural networks in the algorithm’s design allows it to describe a wide range of brain patterns, making it adaptable to various types of neural activity and potential applications.

Source: USC

Implications for Brain-Computer Interfaces

The development of DPAD holds significant promise for advancing brain-computer interfaces. By more accurately decoding movement intentions from brain activity, this technology could greatly enhance the functionality and responsiveness of BCIs.

For individuals with paralysis, this could translate to more intuitive control over prosthetic limbs or communication devices. The improved accuracy in decoding could allow for finer motor control, potentially enabling more complex movements and interactions with the environment.

Moreover, the algorithm’s ability to dissociate specific brain patterns from background neural activity could lead to BCIs that are more robust in real-world settings, where users are constantly processing multiple stimuli and engaged in various cognitive tasks.

Beyond Movement: Future Applications in Mental Health

While the initial focus of DPAD has been on decoding movement-related brain patterns, its potential applications extend far beyond motor control. Shanechi and her team are exploring the possibility of using this technology to decode mental states such as pain or mood.

This capability could have profound implications for mental health treatment. By accurately tracking a patient’s symptom states, clinicians could gain valuable insights into the progression of mental health conditions and the effectiveness of treatments. Shanechi envisions a future where this technology could “lead to brain-computer interfaces not only for movement disorders and paralysis, but also for mental health conditions.”

The ability to objectively measure and track mental states could revolutionize how we approach personalized mental health care, allowing for more precise tailoring of therapies to individual patient needs.

The Broader Impact on Neuroscience and AI

The development of DPAD opens up new avenues for understanding the brain itself. By providing a more nuanced way of analyzing neural activity, this algorithm could help neuroscientists discover previously unrecognized brain patterns or refine our understanding of known neural processes.

In the broader context of AI and healthcare, DPAD exemplifies the potential for machine learning to tackle complex biological problems. It demonstrates how AI can be leveraged not just to process existing data, but to uncover new insights and approaches in scientific research.

#ai#algorithm#Analysis#applications#approach#arm#Article#artificial#Artificial Intelligence#background#BCI#Behavior#Brain#brain activity#brain signals#Brain-computer interfaces#Brain-computer interfaces (BCIs)#brains#california#challenge#code#communication#complexity#computer#data#Design#development#devices#disorders#dynamics

0 notes

Text

half baked morning rant

I do want to make it clear that the reason I talk about HRT and its biological effects so much is not because HRT or medicalization defines your gender.

It's because, for me personally, the interface of my biology education and my transition was mostly centered around figuring out what sex hormones do. I learned about basic biology principles like DNA organization, gene regulation, cell biology, and physiology in high school and undergrad. Taking that understanding and extending it to the mechanisms that hormones use to change gene regulation, and by extension, the rest of your body broadly, was something I did as my understanding became more complete in later undergrad and grad school. It was the key to me starting my own transition.

Why?

Because it was the first time I realized that the "basic biology" arguments of transphobes were complete and utter bullshit. From that point, it was a cascade. As in, wait, if dynamic changes in gene expression aren't considered "biological" to them, then why am I believing anything they say about anything else? When they talk about gametes, and try to include infertile cis people in their definitions of biological sex by talking about what gamete you're "intended" to make, what do they even mean? Why does my current gene expression not define that "intent"? And wait, back up, why is the brain suddenly not considered part of our biology? Why are neurological differences suddenly not "biological"? Why can we say someone's thinking patterns aren't "biological"?

Backing up even further, why does any of this matter more than psychological gender, or sociological gender? If the way we navigate society is gendered, that affects a lot of our lives, and we're just throwing that away?

Basically, being educated about how deep the biological changes of HRT really go was the first domino to fall when I worked through my internalized transphobia.

This is one of many reasons why I hate, hate HATE the concession that uninformed allies and even many trans people themselves give: "well NO ONE is saying that you can change your biological sex, sex and gender are completely unrelated, sex is binary and gender isn't!!!!!"

Well. I am saying that you can change your "biological" sex, I am saying that biological sex isn't binary, and I am saying that misunderstanding of those points has set back transgender advocacy. It makes medical decisions surrounding us less informed, it poisons conversations about how we interact with society, and it makes trans people feel like their gender and sex are less "real" than cis people's.

Not to mention the horrific way it discards intersex people from the conversation entirely.

Recently, I've seen this point enter the mainstream a little, by using intersex people and variation of sex in other species as a "counterargument" to "binary biological sex" thinking. It still doesn't sit right with me. One, because it uses intersex people as a prop for trans advocacy while not actually addressing the needs of either group. And two, because it completely disregards that your current biology and physiology is not 100% predestined from birth, and using people who were "born this way" as a prop does absolutely nothing to increase people's acceptance of trans people who change their biology later in life.

Ugh. This got away from me but yeah. That's my sipping coffee ramble for this morning. If anyone wants to add comment or correct me on discourse here, please do. Especially if you're intersex- this is all the observations of a perisex trans woman.

4K notes


Text

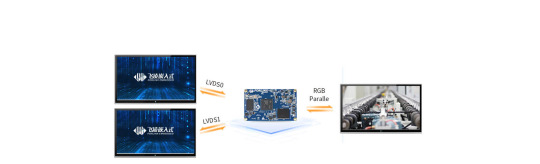

Display and Modification of LVDS Display Interface on AM62x Development Board

1. LVDS Interface Specification

Forlinx Embedded OK6254-C development board provides 2 x 4-lane LVDS display serial interfaces supporting up to 1.19Gbps per lane; the maximum resolution supported by a single LVDS interface is WUXGA (1920 x 1200@60fps, 162MHz pixel clock).
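As a rough sanity check on these figures, the per-lane rate can be related to the pixel clock. The sketch below assumes the common 7:1 LVDS serialization, in which each data lane carries 7 bits per pixel clock. That ratio is an assumption about this interface, not something the article states, and the function names are illustrative.

```python
# Assumption: 7 serialized bits per lane per pixel clock (typical for
# OpenLDI / FPD-Link style LVDS). Not stated in the article.
BITS_PER_LANE_PER_PIXEL_CLOCK = 7


def max_pixel_clock_hz(lane_rate_bps):
    """Highest pixel clock one lane rate can carry under the 7:1 assumption."""
    return lane_rate_bps / BITS_PER_LANE_PER_PIXEL_CLOCK


def fits(pixel_clock_hz, lane_rate_bps):
    """True if the given pixel clock stays within the lane rate budget."""
    return pixel_clock_hz <= max_pixel_clock_hz(lane_rate_bps)


if __name__ == "__main__":
    lane_rate = 1_190_000_000   # 1.19 Gbps per lane, from the specification above
    pixel_clock = 162_000_000   # 162 MHz pixel clock, from the specification above
    print(max_pixel_clock_hz(lane_rate) / 1e6, "MHz ceiling per link")
    print("162 MHz fits:", fits(pixel_clock, lane_rate))
```

Under that assumption, 1.19 Gbps per lane corresponds to a ceiling of 170 MHz, which leaves a small margin above the quoted 162 MHz pixel clock.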

In addition, the interface supports the following three output modes:

(1) Single-channel LVDS output mode: at this time, only 1 x LVDS interface displays output;

(2) 2x single-channel LVDS (copy) output mode: in this mode, 2 x LVDS display and output the same content;

(3) Dual LVDS output mode: 8-lane data and 2-lane clock form the same display output channel.

The Forlinx Embedded OK6254-C development board is equipped with dual asynchronous channels (8 data, 2 clocks), supporting 1920x1200@60fps. All signals are by default compatible with Forlinx Embedded's 10.1-inch LVDS screen, with a resolution of 1280x800@60fps.

2. Output Mode Setting

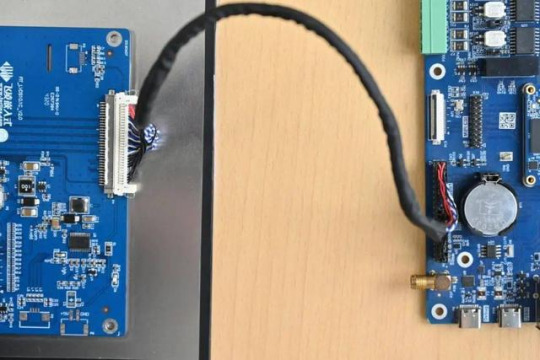

(1) Single LVDS output mode:

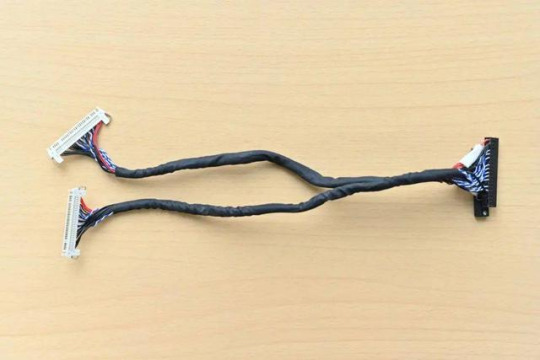

We need a single LVDS screen cable. The black port of the cable is connected to the embedded OK6254-C development board, and the white port is connected to the embedded 10.1-inch LVDS display screen. Connection method as shown in the figure below:

Note that the red line section corresponds to the triangle position, so don't plug it in wrong.

(2) 2x single LVDS (duplicate) output mode:

This mode uses the same connections as the Single LVDS Output Mode. Two white ports link to two 10.1-inch LVDS screens from Forlinx Embedded, and a black port on the right connects to the OK6254-C board's LVDS interface for dual-screen display.

(3) Dual LVDS output mode:

The maximum resolution supported by a single LVDS interface on the OK6254-C development board is WUXGA (1920 x 1200@60fps). To achieve this high-resolution display output, dual LVDS output mode is required.

It is worth noting that the connection between the development board and the screen in this mode is the same as in [Single LVDS Output Mode], but the LVDS cable's and the screen's specifications have been improved.

3. Screen Resolution Changing Method

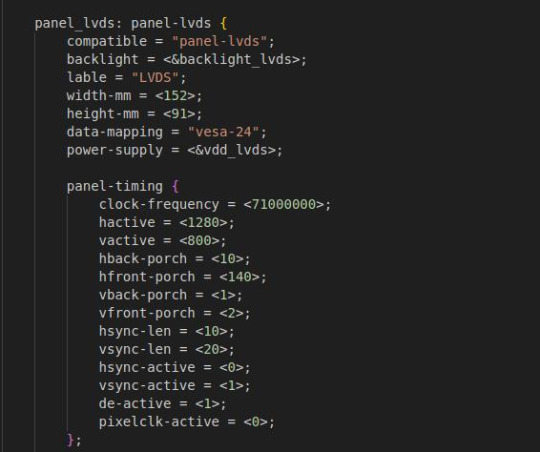

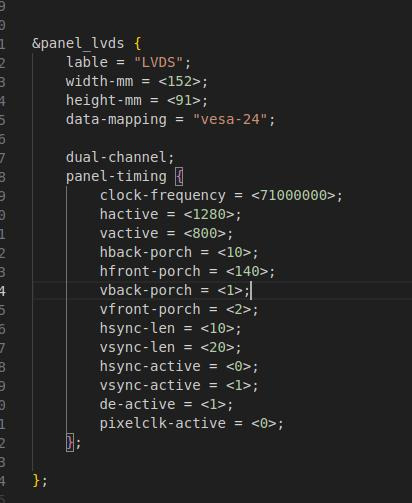

The OK6254-C development board device tree is easy to modify. We need to open the OK6254-C-lvds.dts (single 8-way configuration) and OK6254-C-lvds-dual.dts (dual 8-way configuration) files.

Open OK6254-C-lvds.dts

Open OK6254-C-lvds-dual.dts

The figures above show the screen resolution settings for single LVDS and dual LVDS. The default resolution is 1024 * 600 and the maximum supported resolution is 1920x1200; modify the corresponding parameters according to the screen's user manual.
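When changing the timing parameters, it helps to check that the pixel clock, the totals, and the refresh rate agree with each other. The sketch below assumes the device tree uses the standard Linux display-timing property names (`hactive`, `hfront-porch`, `clock-frequency`, and so on). The numeric values in `EXAMPLE_TIMING` are hypothetical placeholders, not values from the Forlinx files or from any panel datasheet.

```python
# Hypothetical timing values for illustration only; take the real ones
# from the screen's user manual.
EXAMPLE_TIMING = {
    "hactive": 1280, "hfront-porch": 72, "hsync-len": 16, "hback-porch": 72,
    "vactive": 800, "vfront-porch": 10, "vsync-len": 3, "vback-porch": 10,
}


def totals(timing):
    """Return (htotal, vtotal): active area plus blanking in each direction."""
    htotal = (timing["hactive"] + timing["hfront-porch"]
              + timing["hsync-len"] + timing["hback-porch"])
    vtotal = (timing["vactive"] + timing["vfront-porch"]
              + timing["vsync-len"] + timing["vback-porch"])
    return htotal, vtotal


def pixel_clock_hz(timing, refresh_hz):
    """Pixel clock needed to draw every pixel period refresh_hz times a second."""
    htotal, vtotal = totals(timing)
    return htotal * vtotal * refresh_hz


def refresh_hz(timing, clock_frequency_hz):
    """Refresh rate that results from a given clock-frequency value."""
    htotal, vtotal = totals(timing)
    return clock_frequency_hz / (htotal * vtotal)


if __name__ == "__main__":
    clock = pixel_clock_hz(EXAMPLE_TIMING, 60)
    print("clock-frequency for 60 Hz:", clock)
    print("refresh check:", refresh_hz(EXAMPLE_TIMING, clock))
```

If the computed refresh rate is far from the panel's nominal rate, one of the porch or sync values probably does not match the manual.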

4. Compilation Configuration

Because we only modified the device tree, we don't need a full compilation; we only need to compile the kernel separately. After compiling the kernel, a new Image and multiple device tree files will be generated in the images directory.

(1) Switch directory: cd OK6254-linux-sdk/

(2) Set up the environment variables: . build.sh

(3) Compile the kernel separately: sudo ./build.sh kernel

(4) Copy all the device tree files to the /boot/ directory on the development board, replacing the existing ones, then run sync and reboot: scp images/OK6254-C* [email protected]:/boot/

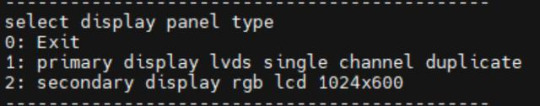

We have modified the corresponding file. How should we select the screen after replacing it? At present, there are three kinds of screen switching control methods: kernel device tree designation, Uboot menu dynamic control, Forlinx Desktop interface and Uboot menu application. Today, I will briefly introduce the dynamic control of Uboot menu.

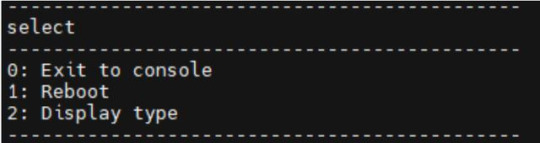

During Uboot, pressing the space bar will take you to the Uboot menu. There are three options in the menu:

Enter 0 to enter the Uboot command line;

Enter 1 to restart Uboot;

Enter 2 to enter the Display Configuration menu.

There are three options in the menu:

Enter 0 to return to the previous menu;

Enter 1 to toggle the display of option 1 and configure the Screen 1 LVDS. Note: Screen 1 supports single LVDS, dual LVDS, and off (i.e., LVDS off).

Enter 2 to toggle the display of option 2 and configure the Screen 2 LCD. Note: Screen 2 supports a 1024 * 600 resolution LCD screen, an 800 * 480 resolution LCD screen, and off (i.e., RGB off).

When selecting the LVDS screen, we enter 1 to select single 8-channel LVDS or dual 8-channel LVDS.

After selecting the desired configuration, enter 0 to return to the previous menu level. Restart Uboot or enter the command line to start the system, which can make the screen settings take effect. For other resolution screens, please modify the kernel device tree screen parameters according to the screen parameter requirements.
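The toggling behaviour of the Display Configuration menu can be modelled as a small state machine. This is only a model for reasoning about key sequences, not Uboot code: the order in which the options cycle, the default selections, and the names `toggle` and `display_menu` are all assumptions.

```python
# Option lists taken from the notes above; the cycling order is assumed.
SCREEN1_OPTIONS = ["single LVDS", "dual LVDS", "off"]
SCREEN2_OPTIONS = ["1024x600 LCD", "800x480 LCD", "off"]


def toggle(options, current):
    """Advance to the next option, wrapping around at the end of the list."""
    return options[(options.index(current) + 1) % len(options)]


def display_menu(keys, screen1="single LVDS", screen2="1024x600 LCD"):
    """Apply a sequence of key presses and return the resulting selections.

    "1" toggles Screen 1, "2" toggles Screen 2, "0" returns to the
    previous menu and ends the sequence. Other keys are ignored.
    """
    for key in keys:
        if key == "0":
            break
        if key == "1":
            screen1 = toggle(SCREEN1_OPTIONS, screen1)
        elif key == "2":
            screen2 = toggle(SCREEN2_OPTIONS, screen2)
    return screen1, screen2


if __name__ == "__main__":
    # Press 1 once to move Screen 1 to the next option, then 0 to go back.
    print(display_menu("10"))
```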

Originally published at www.forlinx.net.

#LVDS Interface#Forlinx Embedded#OK6254C#Screen Resolution Changing Method#Compilation Configuration#Uboot menu dynamic control

0 notes